In today’s digital landscape, APIs (Application Programming Interfaces) are the backbone of modern applications. They enable seamless communication between services, powering everything from mobile apps to enterprise systems. However, as the demand for faster and more efficient APIs grows, performance optimization becomes critical. One of the most effective ways to boost API performance is through caching.

In this post, we’ll explore what caching is, why it’s essential for API performance, and the different caching techniques you can implement to make your APIs faster, more scalable, and cost-effective.

What is Caching?

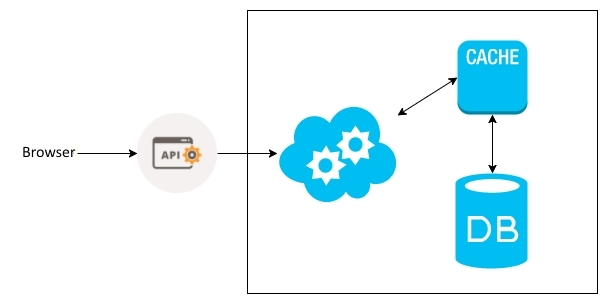

Caching is the process of storing copies of data in a temporary storage location (called a cache) so that future requests for that data can be served faster. Instead of repeatedly fetching data from the original source (e.g., a database or external API), the system retrieves it from the cache, reducing latency and improving response times.

For APIs, caching can significantly reduce the load on servers, minimize database queries, and enhance the overall user experience.

Why is Caching Important for API Performance?

- Reduced Latency: Caching eliminates the need to repeatedly fetch data from slow sources, such as databases or external APIs. This results in faster response times for end users.

- Lower Server Load: By serving cached data, you reduce the number of requests hitting your servers, freeing up resources for other tasks.

- Cost Efficiency: Fewer database queries and API calls mean lower infrastructure costs, especially when using cloud-based services that charge per request.

- Improved Scalability: Caching allows your system to handle more requests without degrading performance, making it easier to scale.

- Enhanced User Experience: Faster APIs lead to happier users, whether they’re interacting with a mobile app, a website, or an enterprise system.

Common Caching Techniques for APIs

There are several caching techniques you can use to optimize API performance. Let’s explore the most popular ones:

1. Client-Side Caching

Client-side caching involves storing data on the client (e.g., a browser or mobile app). This technique is particularly useful for static or infrequently changing data.

- How It Works: The API includes caching headers (e.g., `Cache-Control` or `Expires`) in its responses, instructing the client to store the data locally for a specified period.

- Use Case: Caching static assets like images, CSS files, or configuration data.

- Example: A weather app caches the current weather data for 10 minutes to avoid making repeated API calls.
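To make the client-side flow concrete, here is a minimal Python sketch of a client that honors the `max-age` directive of `Cache-Control`. The `MaxAgeClientCache` class, the `fetch` callback, and the injectable clock are hypothetical names chosen for illustration. Real browsers and HTTP libraries implement far more of the HTTP caching rules (revalidation, `Vary`, heuristic freshness); this sketch deliberately simplifies `no-cache` to "do not reuse".

```python
import re
import time
from typing import Callable, Dict, Optional, Tuple

# Hypothetical response shape for this sketch: (body, headers).
# Header names are assumed to be normalized to "Cache-Control".
Response = Tuple[str, Dict[str, str]]


def parse_max_age(cache_control: Optional[str]) -> int:
    """Return max-age in seconds, or 0 if the response should not be reused."""
    if not cache_control:
        return 0
    directives = [d.strip().lower() for d in cache_control.split(",")]
    # Simplification: treat no-cache like no-store instead of revalidating.
    if "no-store" in directives or "no-cache" in directives:
        return 0
    for directive in directives:
        match = re.fullmatch(r"max-age=(\d+)", directive)
        if match:
            return int(match.group(1))
    return 0


class MaxAgeClientCache:
    """Client-side cache that reuses a response until its max-age runs out."""

    def __init__(self, fetch: Callable[[str], Response],
                 clock: Callable[[], float] = time.monotonic) -> None:
        self._fetch = fetch  # performs the real network call
        self._clock = clock
        self._entries: Dict[str, Tuple[str, float]] = {}  # url -> (body, expires_at)

    def get(self, url: str) -> str:
        now = self._clock()
        entry = self._entries.get(url)
        if entry is not None and now < entry[1]:
            return entry[0]  # still fresh: no network call
        body, headers = self._fetch(url)
        ttl = parse_max_age(headers.get("Cache-Control"))
        if ttl > 0:
            self._entries[url] = (body, now + ttl)
        else:
            self._entries.pop(url, None)  # not cacheable: forget any old copy
        return body


if __name__ == "__main__":
    calls = []

    def fake_weather_api(url: str) -> Response:
        calls.append(url)
        return "cloudy", {"Cache-Control": "public, max-age=600"}

    fake_now = [0.0]
    cache = MaxAgeClientCache(fake_weather_api, clock=lambda: fake_now[0])
    cache.get("/weather")   # miss: calls the API
    fake_now[0] = 300.0
    cache.get("/weather")   # within 10 minutes: served locally
    fake_now[0] = 601.0
    cache.get("/weather")   # expired: calls the API again
    print(len(calls))       # prints 2
```

Injecting the clock keeps the sketch deterministic; in production the default `time.monotonic` is used so wall-clock adjustments cannot make entries appear fresher or older than they are.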

2. Server-Side Caching

Server-side caching stores data on the server, reducing the need to repeatedly fetch it from the database or external services.

- How It Works: The server caches responses in memory (e.g., using Redis or Memcached) or on disk. When a request is made, the server checks the cache before querying the database.

- Use Case: Caching frequently accessed data, such as user profiles or product details.

- Example: An e-commerce API caches product details to avoid querying the database for every request.
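The "check the cache before querying the database" flow is usually called cache-aside. Below is a minimal Python sketch of it for the product-details example, with hit and miss counters so the hit rate can be monitored. The in-process `TTLCache` is an assumed stand-in for Redis or Memcached, and `get_product` and `load_from_db` are hypothetical names.

```python
import time
from typing import Any, Callable, Dict, Tuple

_MISSING = object()  # sentinel so that cached falsy values still count as hits


class TTLCache:
    """In-process stand-in for a shared store such as Redis or Memcached."""

    def __init__(self, ttl_seconds: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.ttl_seconds = ttl_seconds
        self.clock = clock
        self.hits = 0
        self.misses = 0
        self._data: Dict[str, Tuple[Any, float]] = {}  # key -> (value, expires_at)

    def get(self, key: str) -> Any:
        entry = self._data.get(key)
        if entry is not None and self.clock() < entry[1]:
            self.hits += 1
            return entry[0]
        self.misses += 1
        self._data.pop(key, None)  # discard an expired entry, if present
        return _MISSING

    def set(self, key: str, value: Any) -> None:
        self._data[key] = (value, self.clock() + self.ttl_seconds)

    def hit_rate(self) -> float:
        total = self.hits + self.misses
        return self.hits / total if total else 0.0


def get_product(product_id: int, cache: TTLCache,
                load_from_db: Callable[[int], dict]) -> dict:
    """Cache-aside read: check the cache first, fall back to the database."""
    key = f"product:{product_id}"
    cached = cache.get(key)
    if cached is not _MISSING:
        return cached
    product = load_from_db(product_id)  # cache miss: query the source of truth
    cache.set(key, product)
    return product
```

With a real Redis client such as redis-py, `get(key)` and `set(key, value, ex=ttl_seconds)` would play the same roles, after serializing the product (for example, to JSON).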

3. CDN Caching

A Content Delivery Network (CDN) caches static content (e.g., images, videos, or API responses) on servers distributed across multiple geographic locations.

- How It Works: When a user requests data, the CDN serves it from the nearest edge server, reducing latency.

- Use Case: Caching static API responses or large files for global audiences.

- Example: A media streaming API uses a CDN to cache video files, ensuring fast delivery to users worldwide.
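A CDN decides how long to keep a response largely from the origin's `Cache-Control` header. The standard `s-maxage` directive applies only to shared caches such as CDN edges and overrides `max-age` for them, so the origin can let edges hold a response much longer than browsers do. The Python sketch below shows this on a hypothetical WSGI endpoint; the `catalog_app` name, its payload, and the 60-second and one-day TTLs are illustrative assumptions.

```python
import json
from typing import Callable, Iterable, List, Tuple


def cdn_cache_control(browser_ttl: int, cdn_ttl: int) -> str:
    """Let shared caches (CDN edges) keep a response longer than browsers do.

    max-age applies to every cache; s-maxage overrides it for shared caches only.
    """
    if browser_ttl < 0 or cdn_ttl < 0:
        raise ValueError("TTLs must be non-negative")
    return f"public, max-age={browser_ttl}, s-maxage={cdn_ttl}"


def catalog_app(environ: dict,
                start_response: Callable[[str, List[Tuple[str, str]]], object]
                ) -> Iterable[bytes]:
    """Hypothetical WSGI endpoint returning a static media catalog entry."""
    body = json.dumps({"video_id": "intro-101", "title": "Welcome"}).encode("utf-8")
    start_response("200 OK", [
        ("Content-Type", "application/json"),
        ("Content-Length", str(len(body))),
        # Browsers reuse for 1 minute; CDN edges may serve it for 1 day.
        ("Cache-Control", cdn_cache_control(browser_ttl=60, cdn_ttl=86_400)),
    ])
    return [body]


if __name__ == "__main__":
    from wsgiref.util import setup_testing_defaults

    env: dict = {}
    setup_testing_defaults(env)  # fills in a minimal, valid WSGI environ
    captured: dict = {}

    def start_response(status, headers):
        captured["status"], captured["headers"] = status, dict(headers)

    catalog_app(env, start_response)
    print(captured["headers"]["Cache-Control"])  # public, max-age=60, s-maxage=86400
```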

4. Database Caching

Database caching involves storing the results of frequently executed queries in memory.

- How It Works: Query results are kept in memory, either by the database itself or by a caching layer placed in front of it, so subsequent requests for the same data are served from the cache instead of re-running the query.

- Use Case: Caching complex queries or aggregations that are expensive to compute.

- Example: A reporting API caches the results of a daily sales report to avoid recalculating it for every request.
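Not every database caches results for you (MySQL, for example, removed its built-in query cache in version 8.0), so query-result caching is often done in a thin layer in front of the database. The Python sketch below keys cached rows by SQL text plus parameters and uses an in-memory SQLite table with made-up sales rows. `QueryResultCache` and `DAILY_SALES_SQL` are hypothetical names, and the one-day TTL mirrors the daily-report example.

```python
import sqlite3
import time
from typing import Any, Callable, Dict, List, Tuple

CacheKey = Tuple[str, Tuple[Any, ...]]


class QueryResultCache:
    """Caches rows from read-only queries, keyed by SQL text plus parameters."""

    def __init__(self, conn: sqlite3.Connection, ttl_seconds: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.conn = conn
        self.ttl_seconds = ttl_seconds
        self.clock = clock
        self.executions = 0  # how many times the database actually ran a query
        self._cache: Dict[CacheKey, Tuple[List[tuple], float]] = {}

    def query(self, sql: str, params: Tuple[Any, ...] = ()) -> List[tuple]:
        key = (sql, params)
        now = self.clock()
        entry = self._cache.get(key)
        if entry is not None and now < entry[1]:
            return list(entry[0])  # copy so callers cannot mutate the cache
        self.executions += 1
        rows = self.conn.execute(sql, params).fetchall()
        self._cache[key] = (rows, now + self.ttl_seconds)
        return list(rows)

    def invalidate_all(self) -> None:
        """Call after writes that could change cached results."""
        self._cache.clear()


DAILY_SALES_SQL = "SELECT day, SUM(amount) FROM sales GROUP BY day ORDER BY day"

if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE sales (day TEXT, amount REAL)")
    conn.executemany("INSERT INTO sales VALUES (?, ?)",
                     [("2024-01-01", 10.0), ("2024-01-01", 5.5), ("2024-01-02", 7.0)])
    reports = QueryResultCache(conn, ttl_seconds=24 * 60 * 60)
    print(reports.query(DAILY_SALES_SQL))  # [('2024-01-01', 15.5), ('2024-01-02', 7.0)]
    reports.query(DAILY_SALES_SQL)         # served from the cache
    print(reports.executions)              # prints 1
```

Until the TTL expires or `invalidate_all` is called, new sales rows are not reflected in the report. That is the freshness trade-off the TTL makes explicit.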

5. Edge Caching

Edge caching is similar to CDN caching but focuses on caching dynamic API responses at the edge of the network.

- How It Works: API responses are cached on edge servers, which are geographically closer to users.

- Use Case: Caching dynamic but infrequently changing data, such as product prices or stock levels.

- Example: A financial API caches stock prices for 1 minute at edge servers to reduce latency for global users.
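For dynamic data like stock prices, edges often combine a short freshness window with the `stale-while-revalidate` extension (RFC 5861). For example, `Cache-Control: max-age=60, stale-while-revalidate=30` lets an edge answer immediately with a slightly stale price while it refreshes in the background. The Python sketch below simulates one edge node under those assumptions. `EdgeCache` and `run_revalidations` are hypothetical names, and the background refresh is modeled as an explicit call rather than a real worker.

```python
import time
from typing import Any, Callable, Dict, Set, Tuple


class EdgeCache:
    """Simulates one edge node honoring max-age and stale-while-revalidate."""

    def __init__(self, origin: Callable[[str], Any], max_age: float, swr: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.origin = origin      # fetches a fresh value from the origin API
        self.max_age = max_age    # seconds a response counts as fresh
        self.swr = swr            # extra seconds a stale copy may still be served
        self.clock = clock
        self._entries: Dict[str, Tuple[Any, float]] = {}  # key -> (value, stored_at)
        self.pending: Set[str] = set()  # keys waiting for a background refresh

    def get(self, key: str) -> Tuple[Any, str]:
        now = self.clock()
        entry = self._entries.get(key)
        if entry is not None:
            value, stored_at = entry
            age = now - stored_at
            if age < self.max_age:
                return value, "HIT"
            if age < self.max_age + self.swr:
                self.pending.add(key)  # refresh later; answer immediately
                return value, "STALE"
        value = self.origin(key)  # missing or too old: go to the origin
        self._entries[key] = (value, now)
        return value, "MISS"

    def run_revalidations(self) -> None:
        """Stand-in for the background refresh a real edge would run."""
        for key in sorted(self.pending):
            self._entries[key] = (self.origin(key), self.clock())
        self.pending.clear()
```

The user who hits a `STALE` response pays no origin round-trip, while the next user after the refresh sees the updated price.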

6. Application-Level Caching

Application-level caching involves caching data within the application itself, often using in-memory stores like Redis or Memcached.

- How It Works: The application stores frequently accessed data in memory, reducing the need to query the database or external services.

- Use Case: Caching session data, user preferences, or frequently accessed API responses.

- Example: A social media API caches user feed data to reduce database load during peak traffic.
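When the cache lives inside the application process itself, it needs a size bound so it cannot grow without limit; least-recently-used (LRU) eviction is a common choice. Below is a minimal Python sketch of a bounded LRU cache applied to the feed example. `LRUCache`, `get_feed`, and `build_feed` are hypothetical names. For pure functions, the standard library's `functools.lru_cache` decorator offers the same eviction policy.

```python
from collections import OrderedDict
from typing import Any, Callable, Hashable, List, Optional


class LRUCache:
    """Bounded in-process cache that evicts the least recently used entry.

    This sketch assumes None is never stored as a value.
    """

    def __init__(self, capacity: int) -> None:
        if capacity <= 0:
            raise ValueError("capacity must be positive")
        self.capacity = capacity
        self._data: "OrderedDict[Hashable, Any]" = OrderedDict()

    def get(self, key: Hashable) -> Optional[Any]:
        if key not in self._data:
            return None
        self._data.move_to_end(key)  # mark as most recently used
        return self._data[key]

    def put(self, key: Hashable, value: Any) -> None:
        if key in self._data:
            self._data.move_to_end(key)
        self._data[key] = value
        if len(self._data) > self.capacity:
            self._data.popitem(last=False)  # evict the least recently used entry


def get_feed(user_id: int, cache: LRUCache,
             build_feed: Callable[[int], List[str]]) -> List[str]:
    """Hypothetical feed lookup: reuse a cached feed, else build and cache it."""
    feed = cache.get(user_id)
    if feed is None:
        feed = build_feed(user_id)  # the expensive path (database queries, ranking)
        cache.put(user_id, feed)
    return feed
```

An in-process cache like this is fastest but is private to each server instance; a shared store such as Redis keeps all instances consistent at the cost of a network hop.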

Best Practices for API Caching

To implement caching effectively, follow these best practices:

- Choose the Right Cache Expiry: Set appropriate expiration times for cached data to balance performance and freshness. Use shorter TTLs (Time-to-Live) for dynamic data and longer TTLs for static data.

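- Invalidate Cache Properly: Ensure that cached data is invalidated or updated when the underlying data changes. Common approaches are explicit invalidation, where the cache entry is deleted whenever the source record is written, and write-through caching, where every write updates the database and the cache together.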

- Use Cache Headers: Leverage HTTP caching headers like `Cache-Control`, `Expires`, and `ETag` to control how clients and intermediaries cache your API responses.

- Monitor Cache Performance: Use monitoring tools to track cache hit rates, miss rates, and latency. This will help you identify bottlenecks and optimize your caching strategy.

- Layer Your Caching: Combine multiple caching techniques (e.g., client-side, server-side, and CDN caching) to maximize performance and scalability.

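- Secure Your Cache: Ensure that sensitive data is not cached in insecure locations. Mark user-specific or sensitive responses with `Cache-Control: private` (only the user's own client may store them) or `no-store` (no cache may store them) so that shared caches such as CDNs do not keep them, and use encryption and access controls to protect cached data.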
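The two invalidation strategies differ only in what happens to the cache after a successful database write. The Python sketch below contrasts them side by side. `ProductRepository` and its methods are hypothetical, and plain dicts stand in for the database and the cache.

```python
from typing import Dict, Optional

Product = Dict[str, float]


class ProductRepository:
    """Hypothetical repository; one dict plays the database, another the cache."""

    def __init__(self) -> None:
        self.db: Dict[int, Product] = {}
        self.cache: Dict[int, Product] = {}

    def read(self, product_id: int) -> Optional[Product]:
        if product_id in self.cache:
            return self.cache[product_id]
        product = self.db.get(product_id)
        if product is not None:
            self.cache[product_id] = product  # populate on miss (cache-aside)
        return product

    def update_write_through(self, product_id: int, product: Product) -> None:
        self.db[product_id] = product     # 1. persist to the source of truth
        self.cache[product_id] = product  # 2. refresh the cache in the same call

    def update_and_invalidate(self, product_id: int, product: Product) -> None:
        self.db[product_id] = product     # 1. persist to the source of truth
        self.cache.pop(product_id, None)  # 2. drop the stale entry; next read reloads
```

Writing to the database first means a failed write never leaves the cache ahead of the source of truth. In concurrent systems, a slow reader can still race with a writer and re-insert an old value, which is why a TTL is usually kept as a safety net even with explicit invalidation.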

Real-World Example: GitHub’s API Caching

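GitHub’s API is a great example of effective caching in action. The API uses a combination of server-side caching and CDN caching to reduce latency and improve performance; for instance, repository metadata and README files are cached at the edge, ensuring fast delivery to users worldwide. GitHub also uses cache headers to control how clients cache responses: its REST API returns `ETag` and `Last-Modified` headers, so a client can repeat a request with `If-None-Match` or `If-Modified-Since` and receive a bodiless `304 Not Modified` when nothing has changed. GitHub’s documentation notes that a `304` response to an authorized conditional request does not count against the primary rate limit.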
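Conditional requests of the kind GitHub’s REST API supports rest on `ETag` validators. Below is a minimal server-side sketch in Python. It derives the tag from a hash of the response body and answers `304` when the client's `If-None-Match` matches. The `respond` and `make_etag` functions, the truncated SHA-256 tag, and the `max-age=60` value are illustrative assumptions, not GitHub’s implementation.

```python
import hashlib
import json
from typing import Dict, Optional, Tuple


def make_etag(body: bytes) -> str:
    """Derive a strong validator from the response bytes (quoted, as HTTP requires)."""
    return '"' + hashlib.sha256(body).hexdigest()[:16] + '"'


def _opaque(tag: str) -> str:
    # If-None-Match uses weak comparison, so ignore a leading W/ prefix.
    tag = tag.strip()
    return tag[2:] if tag.startswith("W/") else tag


def respond(resource: dict,
            if_none_match: Optional[str]) -> Tuple[int, Dict[str, str], bytes]:
    """Return (status, headers, body), answering 304 when the client's copy is current."""
    body = json.dumps(resource, sort_keys=True).encode("utf-8")
    etag = make_etag(body)
    headers = {"ETag": etag, "Cache-Control": "max-age=60"}
    if if_none_match is not None:
        candidates = {_opaque(t) for t in if_none_match.split(",")}
        if "*" in candidates or _opaque(etag) in candidates:
            return 304, headers, b""  # client's cached body is still valid
    headers["Content-Type"] = "application/json"
    return 200, headers, body
```

Because this sketch still serializes the resource to compute the tag, it saves bandwidth and client work rather than server computation; storing a version number or update timestamp alongside the data would let the server skip serialization on a match.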

Conclusion

Caching is a powerful technique for optimizing API performance. By reducing latency, lowering server load, and improving scalability, caching can transform the way your APIs operate. Whether you’re caching data on the client, server, or edge, the key is to choose the right strategy for your use case and implement it effectively.

By following best practices and leveraging the right tools, you can build APIs that are not only fast and efficient but also cost-effective and scalable. So, the next time you’re working on an API, don’t forget to consider caching—it might just be the performance boost your system needs.